It’s a familiar scenario to most of us: navigating your way through unfamiliar territory by following the verbal prompts provided by your trusty GPS device. “Turn right on Main Street and then get in the left lane,” says the electronic voice. You activate your signal light, scan the right-hand side of the road, locate the street sign, verify that it says Main Street, and turn your car at the corner. Then, once on Main Street, you pull to the left to get into the left lane, just as your GPS directed you to do. Easy for you. Not so much for your robot. Of course, many robots are perfectly capable of receiving and responding to GPS data electronically, but responding to voice commands and recognizing landmarks described in those commands is as difficult for your bot as it would be for your cat.

The good news for your bot is that researchers at Purdue University are working on technology that would help robots understand and respond to language, enabling everyone from emergency responders to military operators to individuals with physical limitations to more easily direct robots to a certain location simply by describing the route.

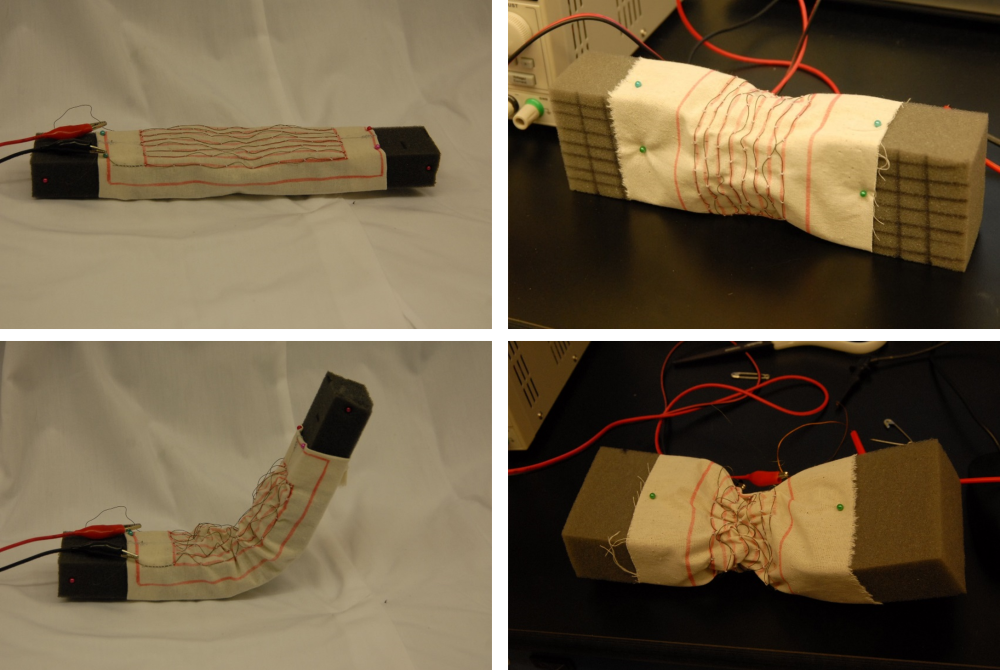

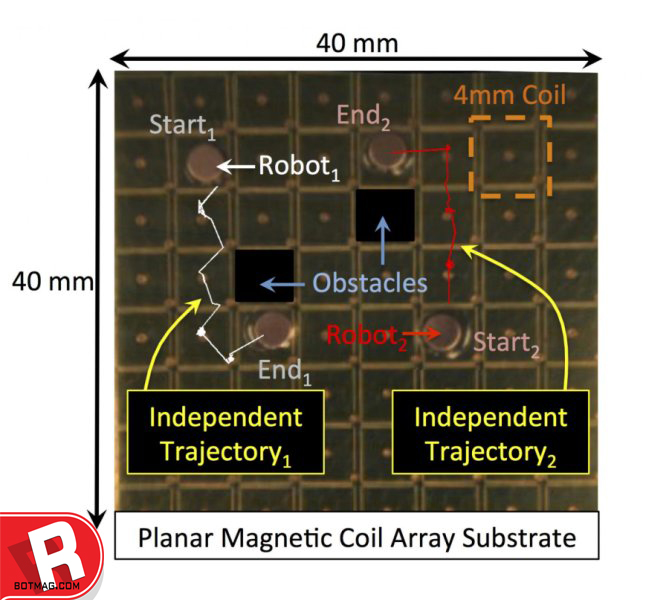

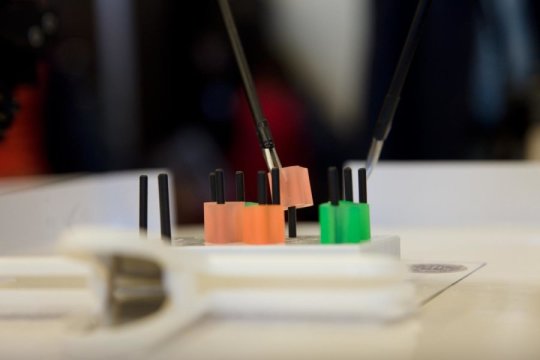

Led by Associate Professor Jeffrey Mark Siskind of Purdue’s School of Electrical and Computer Engineering, the team of researchers began by mounting several cameras on their six-wheeled robot, Darth Vader. They then created three algorithms that enabled it to recognize the objects it traveled around, in conjunction with the direction in which it was traveling, while an operator directed it remotely around a closed course.

“It was able to generate its own sentences to describe the paths it had taken. It was also able to generate its own sentences to describe a separate path of travel on the same course,” Siskind said. “The robot aggregated its sensory data over numerous experiences.”

The research was funded by a 2015 National Robotics Initiative grant as part of the Initiative’s mission to stimulate development of collaborative robots. Siskind and the team at Purdue are hoping to take the technology further and are seeking collaborators and additional funding to do so.

Scott Bronikowski, a military veteran and former doctoral candidate working with Siskind, developed the idea and expressed interest in a real-world application for the technology.

“We do a lot with robots in the Army,” Bronikowski said. “But what we call robots really are just rugged remote control vehicles, and that means one soldier is controlling the robot and another soldier needs to watch over him or her. That makes the soldier vulnerable, and I thought if we had a robot that could be controlled by voice it would make things much easier and safer. I was thinking something like R2D2 from ‘Star Wars.’”

For more information, visit Purdue University’s website.

Robot Magazine The World's Leading Robot Source
